Universal Adversarial Perturbations Generation for Speaker Recognition

| Jiguo Li | Xinfeng Zhang | Chuanmin Jia | Jizheng Xu | Li Zhang | Yue Wang | Siwei Ma✝ | Wen Gao |

| ICT, CAS | ✝PKU | UCAS | Bytedance |

Abstract

Attacking deep-learning-based biometric systems has drawn increasing attention with the wide deployment of fingerprint, face, and speaker recognition systems, since neural networks are vulnerable to adversarial examples: inputs that have been intentionally perturbed while remaining almost imperceptible to humans. In this paper, we demonstrate the existence of universal adversarial perturbations (UAPs) for speaker recognition systems. We propose a generative network that learns a mapping from a low-dimensional normal distribution to the UAP subspace and then synthesizes UAPs that, when added to arbitrary input signals, fool a well-trained speaker recognition model with high probability. Experimental results on the TIMIT and LibriSpeech datasets demonstrate the effectiveness of our model.
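For readers unfamiliar with UAPs, the standard condition from the adversarial-examples literature can be written as below: a single perturbation $v$, bounded in norm, must change the predicted label for most inputs drawn from the data distribution. The second line combines this with the generator described above. The notation ($\hat{k}$ for the predicted label, $\mu$ for the distribution of speech signals, $\xi$ for the norm bound, $\delta$ for the tolerated failure rate, $\alpha$ for the scale factor, $G$ for the generator, $d$ for the latent dimension) is our own sketch and may differ from the paper's.

```latex
\begin{aligned}
&\text{find } v \ \text{ such that } \ \|v\|_p \le \xi
\quad\text{and}\quad
\Pr_{x\sim\mu}\big[\hat{k}(x+v)\neq \hat{k}(x)\big] \ge 1-\delta,\\
&v = \alpha\, G(z), \qquad z \sim \mathcal{N}(0, I_d), \qquad d \ll \dim(x).
\end{aligned}
```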

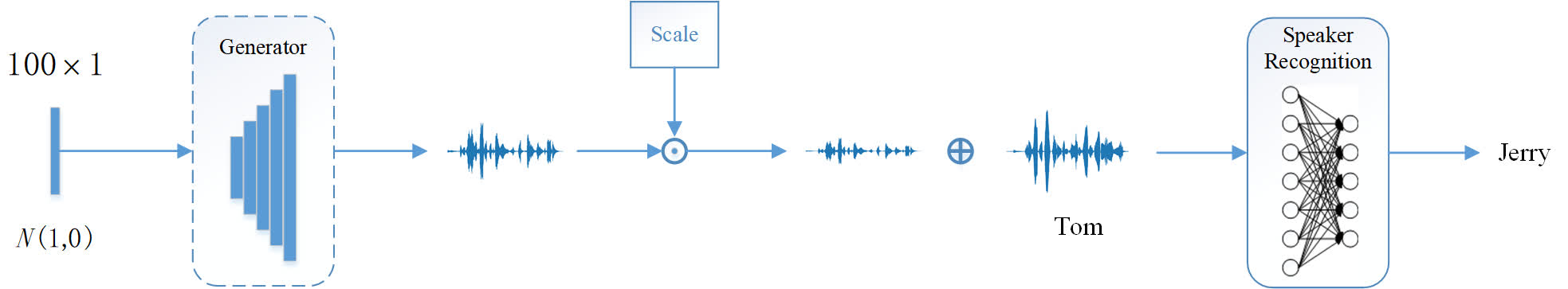

Framework

Illustration of our framework. We train a generator, a multi-layer CNN, to synthesize universal adversarial perturbations (UAPs) from Gaussian noise. The UAPs are multiplied by a scale factor and added to the input speech as additive noise. The victim model is a state-of-the-art speaker recognition model.
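The pipeline in the figure (Gaussian code, CNN generator, scaled additive perturbation) can be sketched in plain Python as follows. This is a toy illustration under stated assumptions, not the paper's architecture: the layer count, kernel size, nearest-neighbour upsampling, leaky-ReLU/tanh activations, the names `toy_generator` and `perturb`, and the clipping to [-1, 1] are all hypothetical choices, and the weights are random rather than trained. The final tanh bounds every generated sample, so the scale factor directly caps the per-sample perturbation amplitude.

```python
import math
import random

def conv1d(signal, kernel):
    """1-D cross-correlation with zero padding; output has the same length as `signal`."""
    k = len(kernel)
    left = k // 2
    padded = [0.0] * left + list(signal) + [0.0] * (k - 1 - left)
    return [sum(padded[i + j] * kernel[j] for j in range(k)) for i in range(len(signal))]

def upsample(signal, factor):
    """Nearest-neighbour upsampling: repeat every sample `factor` times."""
    return [s for s in signal for _ in range(factor)]

def toy_generator(z, kernels, factor=2):
    """Hypothetical CNN generator: each layer upsamples, convolves, then applies a nonlinearity.

    Hidden layers use leaky ReLU; the last layer uses tanh, so every output sample lies in (-1, 1).
    """
    h = list(z)
    for idx, kernel in enumerate(kernels):
        h = conv1d(upsample(h, factor), kernel)
        if idx < len(kernels) - 1:
            h = [x if x > 0 else 0.2 * x for x in h]
        else:
            h = [math.tanh(x) for x in h]
    return h

def perturb(speech, uap, scale):
    """Add `scale` times the UAP to a waveform and clip to the valid range [-1, 1]."""
    return [max(-1.0, min(1.0, s + scale * u)) for s, u in zip(speech, uap)]

# Usage: one generated UAP is reused for any waveform of matching length.
rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(16)]                           # low-dimensional Gaussian code
kernels = [[rng.gauss(0.0, 0.5) for _ in range(5)] for _ in range(3)]  # untrained, random weights
uap = toy_generator(z, kernels)                                        # 16 * 2**3 = 128 samples
speech = [0.5 * math.sin(2 * math.pi * 5 * t / 128) for t in range(128)]
adv = perturb(speech, uap, scale=0.01)
```

Because clipping only moves a sample back toward the clean value, the perturbation actually applied never exceeds `scale` in absolute value.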

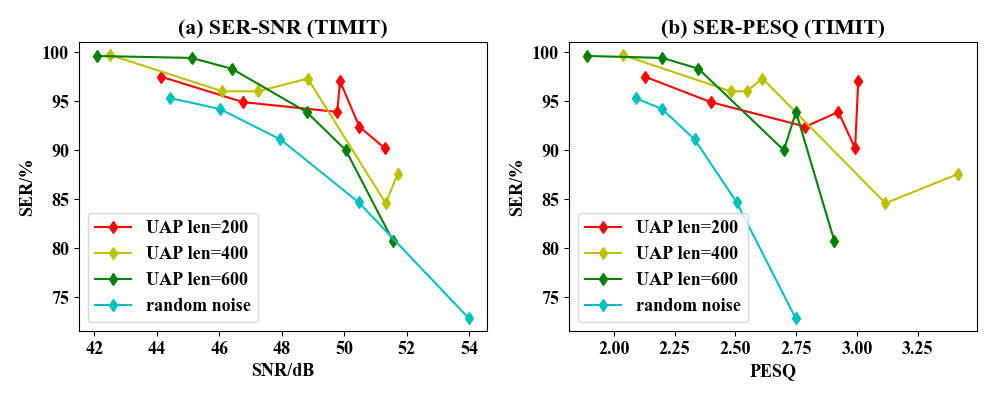

Comparison with Gaussian noise

Comparison with Gaussian noise. Our UAPs achieve better attack performance than Gaussian noise, indicating that the generator has learned universal adversarial patterns.
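A fair comparison against Gaussian noise requires both perturbations to have the same strength. The page does not state how the noise level was chosen, so the sketch below assumes an energy-matched baseline: i.i.d. Gaussian noise rescaled to the same L2 norm as the UAP, which makes the signal-to-perturbation ratio (SNR) identical for both. The helper names are hypothetical, and the sinusoidal `uap` is only a stand-in for a generated perturbation.

```python
import math
import random

def l2_norm(x):
    """Euclidean norm of a 1-D signal."""
    return math.sqrt(sum(v * v for v in x))

def energy_matched_gaussian(reference, rng):
    """i.i.d. Gaussian noise rescaled so its L2 norm equals that of `reference`."""
    noise = [rng.gauss(0.0, 1.0) for _ in reference]
    factor = l2_norm(reference) / l2_norm(noise)
    return [factor * n for n in noise]

def snr_db(signal, perturbation):
    """Signal-to-perturbation ratio in dB: 10 * log10(||x||^2 / ||delta||^2)."""
    return 10.0 * math.log10(sum(s * s for s in signal) / sum(p * p for p in perturbation))

# Usage with a stand-in perturbation (a real UAP would come from the trained generator).
rng = random.Random(42)
speech = [0.5 * math.sin(2 * math.pi * 3 * t / 200) for t in range(200)]
uap = [0.01 * math.sin(0.7 * t) for t in range(200)]
baseline = energy_matched_gaussian(uap, rng)
snr_uap = snr_db(speech, uap)        # SNR of speech under the UAP
snr_noise = snr_db(speech, baseline)  # same SNR, up to floating-point rounding
```

With the strength held fixed this way, any difference in attack performance can be attributed to the structure of the perturbation rather than its energy.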

Results of the non-targeted attack

Here we show the results with $\lambda=1500$.

| Prediction on original speech | Prediction on adversarial example | Ground truth |
|---|---|---|
| fcjf0 | 102 | fcjf0 |
| fcjf0 | 102 | fcjf0 |
| fcjf0 | 102 | fcjf0 |
| fdaw0 | 102 | fdaw0 |
| fdaw0 | 102 | fdaw0 |
| fdaw0 | 102 | fdaw0 |
| faem0 | 38 | faem0 |
| faem0 | 84 | faem0 |
| faem0 | 84 | faem0 |
| fbcg1 | 172 | fbcg1 |

Non-targeted attack results on the TIMIT dataset.
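A non-targeted attack succeeds whenever the prediction moves away from the correct speaker, regardless of which wrong label results. The sketch below computes two common metrics over the ten TIMIT examples in the table: the fooling rate (prediction changes after adding the UAP) and the attack success rate (a clean-correct sample becomes misclassified). The function names are hypothetical, and because the table reports adversarial predictions as numeric class indices, we assume those indices denote speakers other than the ground-truth ones.

```python
from collections import Counter

# (ground truth, prediction on original speech, prediction on adversarial example),
# transcribed from the TIMIT results table. Adversarial predictions are class indices,
# assumed here to differ from the true speaker.
ROWS = [
    ("fcjf0", "fcjf0", "102"), ("fcjf0", "fcjf0", "102"), ("fcjf0", "fcjf0", "102"),
    ("fdaw0", "fdaw0", "102"), ("fdaw0", "fdaw0", "102"), ("fdaw0", "fdaw0", "102"),
    ("faem0", "faem0", "38"), ("faem0", "faem0", "84"), ("faem0", "faem0", "84"),
    ("fbcg1", "fbcg1", "172"),
]

def fooling_rate(rows):
    """Fraction of samples whose predicted label changes once the UAP is added."""
    return sum(1 for _, clean, adv in rows if clean != adv) / len(rows)

def attack_success_rate(rows):
    """Among samples classified correctly when clean, fraction misclassified after the attack."""
    correct = [(gt, adv) for gt, clean, adv in rows if clean == gt]
    return sum(1 for gt, adv in correct if adv != gt) / len(correct)

print(fooling_rate(ROWS))                                 # 1.0
print(attack_success_rate(ROWS))                          # 1.0
print(Counter(adv for _, _, adv in ROWS).most_common(1))  # [('102', 6)]
```

The last line counts how often each adversarial label occurs; on these ten examples, six are pushed to the same index, 102.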

Paper

"Universal Adversarial Perturbations Generation for Speaker Recognition", Jiguo Li, Xinfeng Zhang, Chuanmin Jia, Jizheng Xu, Li Zhang, Yue Wang, Siwei Ma, Wen Gao [arXiv] [IEEE Xplore]

Code for This Paper

The code is available on GitHub.

Acknowledgment

The authors would like to thank Jing Lin and Junjie Shi for helpful discussions.
